The AI industry’s need for data center compute is driving demand for massive supercomputers, with clusters of over one million chips now being talked about. We discuss how this trend could impact companies like NVIDIA (Nasdaq: NVDA) and AMD (Nasdaq: AMD) and the trends driving this push for such massive AI computing clusters.

Why One Million Chip Supercomputers Are Coming

Here are some highlights from the discussion between 24/7 Wall Street analysts Eric Bleeker and Austin Smith.

- In an interview with The Next Platform, AMD’s General Manager of Data Centers Forrest Norrod gave some stunning quotes about demand for chips in AI computing clusters.

- Here’s the exchange with Norrod:

“TPM: What’s the biggest AI training cluster that somebody is serious about – you don’t have to name names. Has somebody come to you and said with MI500, I need 1.2 million GPUs or whatever.

Forrest Norrod: It’s in that range? Yes.

TPM: You can’t just say “it’s in that range.” What’s the biggest actual number?

Forrest Norrod: I am dead serious, it is in that range.

TPM: For one machine.

Forrest Norrod: Yes, I’m talking about one machine.

TPM: It boggles the mind a little bit, you know?

Forrest Norrod: I understand that. The scale of what’s being contemplated is mind blowing. Now, will all of that come to pass? I don’t know. But there are public reports of very sober people are contemplating spending tens of billions of dollars or even a hundred billion dollars on training clusters.”

- So, to emphasize, the head of AMD’s data center business is saying AMD has engaged with customers who are discussing clusters with about a million processors in just one machine!

- Running the numbers: a top-of-the-line GPU might sell for $25,000, which would imply that a one-million-GPU machine would contain $25 billion worth of GPUs alone. On top of that would be plenty of other costs, like networking, memory, and servers.

- What’s amazing is that we’re hearing about computers of this size from more than just AMD. In a recent conference call, Broadcom (Nasdaq: AVGO) described state-of-the-art AI clusters growing from about 4,000 processors today to upward of a million in the designs now being discussed.

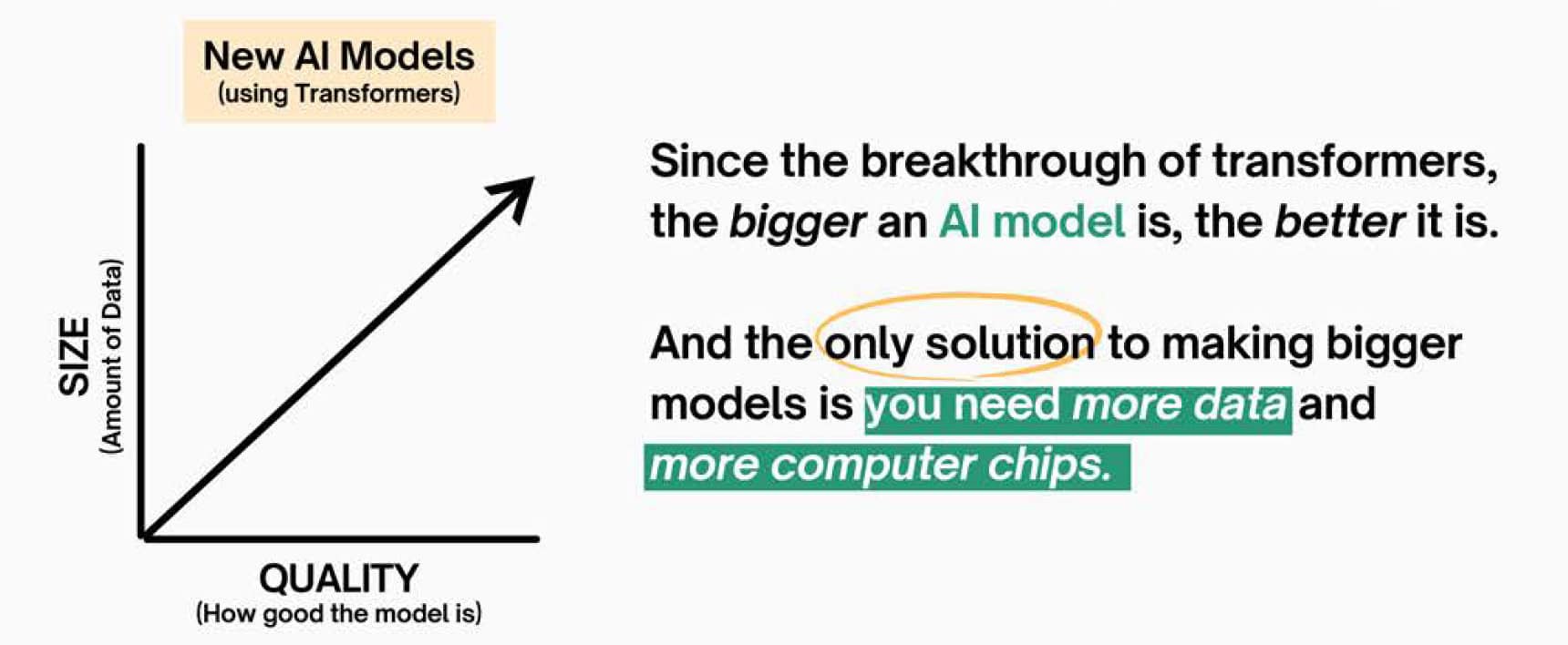

- Why is this happening? As displayed in the chart below, right now there is something close to a linear relationship between the size of the data fed into models and the quality of AI models. So, right now, more data and more computer chips (specifically GPUs and other custom chips) are the bottlenecks to advancing AI.

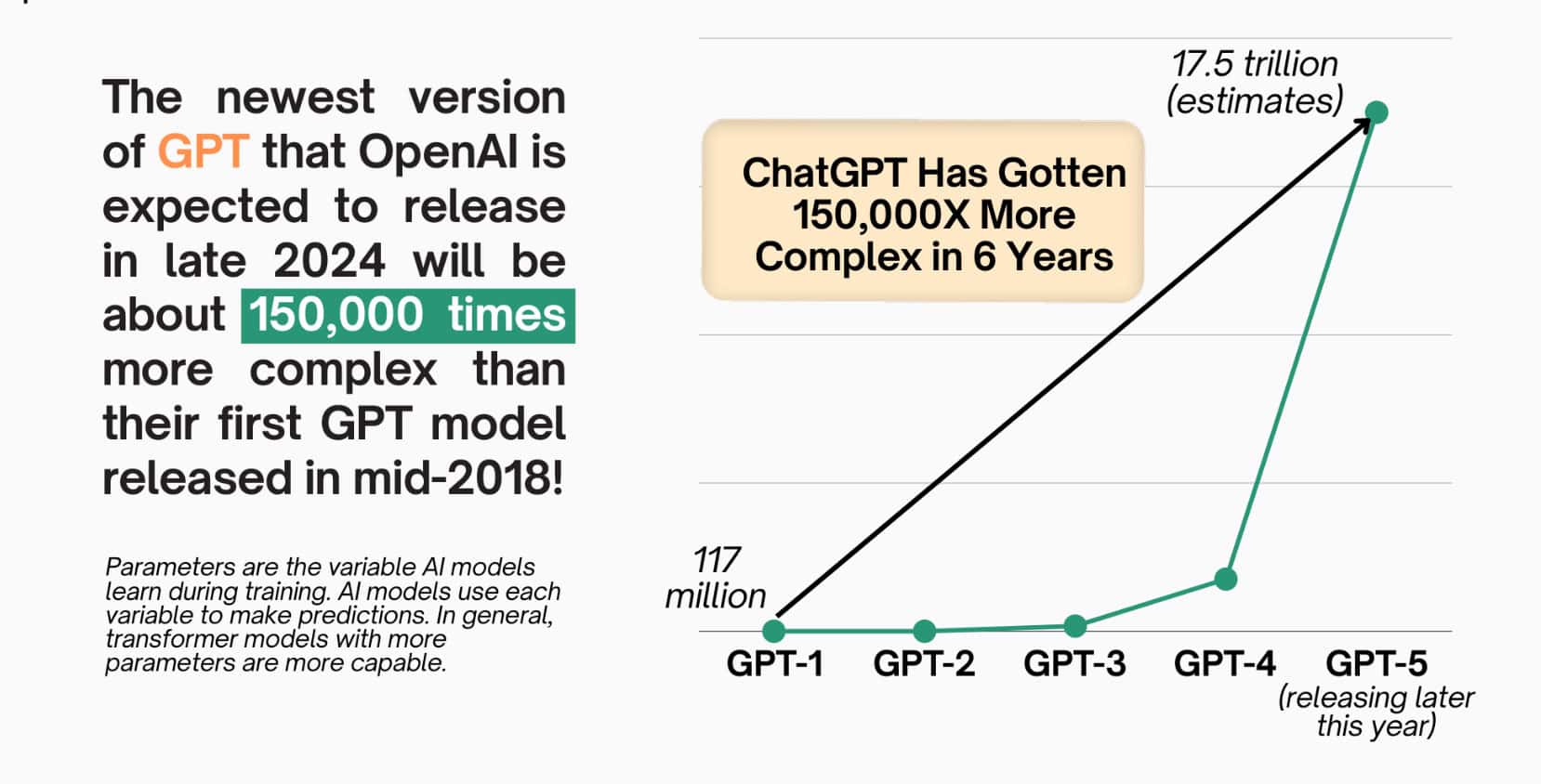

- Taken even further, we could look at the complexity of cutting-edge AI models. The first GPT model had about 117 million parameters. It’s estimated that GPT-5 (OpenAI’s next version) will have about 17.5 trillion parameters. As you can imagine, the larger these models become, the more compute is needed to train them.

- The bottom line: most of these “supercomputers” are still in concept stages. We’ve heard chatter of Microsoft looking at a “Project Stargate” that could cost upward of $100 billion.

- This shows why training is so promising relative to inferencing (actually “running” AI models): there’s an arms race among plenty of well-backed companies that are going for artificial general intelligence. There’s really no concept today of what the upside could be.
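
The back-of-the-envelope numbers in the highlights above can be checked with a short Python sketch. It uses only the rough figures quoted in this article (a $25,000 GPU, clusters of about 4,000 to 1.2 million chips, and the 117 million and 17.5 trillion parameter counts); these are estimates, not vendor pricing. The function name `gpu_cost_usd` is hypothetical, and the calculation deliberately ignores networking, memory, and server costs.

```python
# Rough figures taken from the article; all are estimates, not vendor data.
GPU_PRICE_USD = 25_000  # assumed price of one top-of-the-line GPU


def gpu_cost_usd(gpu_count: int, price_per_gpu: int = GPU_PRICE_USD) -> int:
    """Return the cost of the GPUs alone (no networking, memory, or servers)."""
    return gpu_count * price_per_gpu


# Cluster sizes mentioned in the article.
cluster_sizes = {
    "state of the art today": 4_000,
    "one million chips": 1_000_000,
    "figure floated in the interview": 1_200_000,
}

for label, count in cluster_sizes.items():
    cost_billions = gpu_cost_usd(count) / 1e9
    print(f"{label}: {count:,} GPUs -> ${cost_billions:,.1f} billion in GPUs alone")

# Growth in model size implied by the parameter counts quoted in the article.
first_model_params = 117_000_000                # about 117 million
estimated_next_params = 17_500_000_000_000      # about 17.5 trillion (an estimate)
param_growth = estimated_next_params / first_model_params
print(f"Parameter growth: roughly {param_growth:,.0f}x")
```

On these assumptions, the one-million-chip case works out to the $25 billion cited above, and the quoted parameter counts imply a model roughly 150,000 times larger than the first one.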

