Last week, social media giant Facebook Inc. (NASDAQ: FB) announced the creation of an independent panel that was immediately dubbed the company’s “supreme court.” The names of 20 members were announced, along with a promise that another 20 would be named in coming days.

The stock market yawned. The S&P 500 rose by about 1.5% on the day of the announcement, while Facebook shares rose only about 0.5%. If a company’s stock price tells investors everything they need to know about that company, then investors either don’t believe Facebook’s supreme court will make much difference to the business, or they think the Oversight Board (its official name) has been given an impossible task with little chance of success.

What Is Facebook’s Oversight Board?

Facebook’s new Oversight Board has been formed “to exercise independent judgment over some of the most difficult and significant content decisions” that the company faces. The company has placed the board under a trust over which it has no control, and the board will rule on appeals from users whose posts the social network has taken down.

That sounds straightforward, but it isn’t. If the Oversight Board manages to do its job, it will be despite the social network, not because of it.

Facebook’s strategy has always been to corral more users. Doing that at the scale the company has managed required it to suspend judgment over what its users say. Continuing to grow its user base (and its advertising revenue) means continuing to suspend those judgments.

Will the Oversight Board be willing to bite the hand that feeds it? And even if it is, how much will Facebook feel the bite? When Facebook reported earnings two weeks ago, it said more than 3 billion people used Facebook, Instagram, WhatsApp or Messenger each month. Daily active users of Facebook itself rose 11% to 1.73 billion.

What Can the Oversight Board Do?

Earlier this week, the company released its Community Standards Enforcement Report. Facebook removed content from 9.6 million posts during the first quarter of this year. Nearly 90% of those actions were proactive; that is, they were not prompted by a complaint from another user.

The company also reported that 1.3 million of those actions were appealed. Of that number, 63,600 posts were restored, fewer than 5% of the appeals.

The Oversight Board will not be responsible for reviewing most of those actions. Its role is to establish a framework that Facebook must use when deciding whether to remove content. Applying that framework will be left to Facebook.

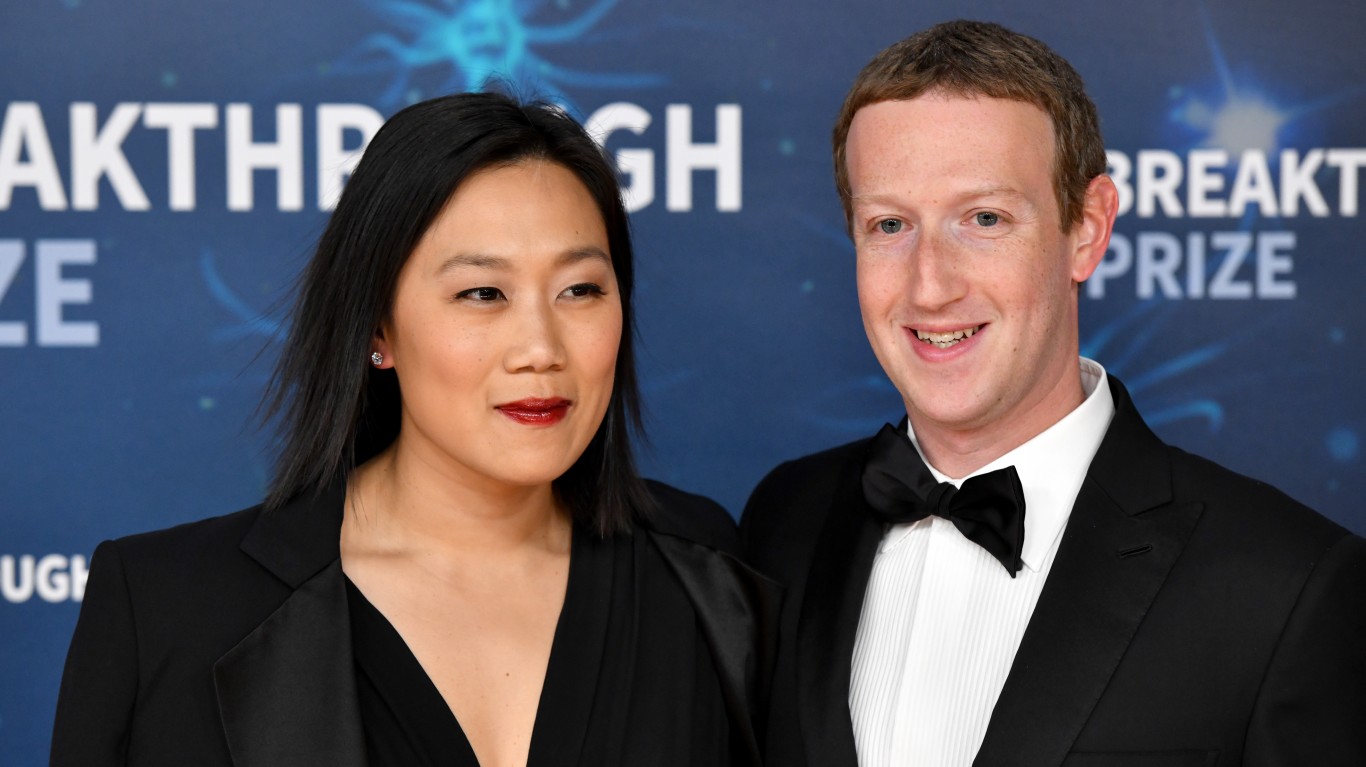

It’s hard to shake the feeling that Facebook wants to put responsibility for its social conscience somewhere outside the company’s management. When the Oversight Board was announced, Facebook CEO Mark Zuckerberg said, “Facebook should not make so many important decisions about free expression and safety on our own.”

On the one hand, that sounds enlightened. On the other, it sounds like a dodge.

Can Corporations Develop a Social Conscience?

Last August, the Business Roundtable, a group of 181 U.S. CEOs who lead companies with a total of 16 million employees and more than $7 trillion in annual revenues, issued a statement proclaiming that from now on their companies would be managed “for the benefit of all stakeholders – customers, employees, suppliers, communities and shareholders.”

The statement replaced this 1997 Business Roundtable statement on corporate governance:

The paramount duty of management and of boards of directors is to the corporation’s stockholders. The interests of other stakeholders are relevant as a derivative of the duty to stockholders.

Surely, it is in the best interests of Facebook stockholders to maximize value by encouraging more users to produce more content (free to Facebook) around which advertising space can be sold.

Is that in the best interests of Facebook’s stakeholders, though? Can those stakeholders even be identified? Are they just the platform’s users, or do national governments also have a stake in what appears on Facebook or Instagram?

The French parliament on Wednesday passed a law requiring social media companies and other online platforms to remove child sexual abuse and terrorism-related content from their websites within one hour or face a fine of up to 4% of their global revenue.

In Facebook’s case, a 4% fine applied to first-quarter revenue alone of $17.4 billion would come to as much as $696 million for failing to remove such content.

Other content determined to be “manifestly illicit” must be removed within 24 hours. One French civil liberties group said that rather than trying to limit speech, lawmakers should address the business models of the social media networks.

That’s the essential conflict: the interests of the free market versus the wider interests of society. A solution designed by Facebook is unlikely to satisfy both sides, and if one side has to give way, the market is more likely to prevail.

Thank you for reading! Have some feedback for us?

Contact the 24/7 Wall St. editorial team.

24/7 Wall St.