Hospitals of today are places of hope and innovation. They are a far cry from the institutions of the early 20th century, where conditions were unsanitary and the patients were mostly poor. One hundred years ago, the public associated hospitals with places where people went to die.

To find out what hospitals looked like 100 years ago, 24/7 Wall St. reviewed sources such as the drug information resource Monthly Prescribing Reference and the National Institutes of Health's National Library of Medicine, as well as media sources such as U.S. News & World Report and PBS.com.

The American hospital system grew out of the almshouse, a charity home that tended to the needs of the mentally ill, the blind, the deaf, and those with ailments such as tuberculosis, as well as petty thieves, prostitutes, and abandoned children. These places did not provide medical services. The nation’s first institution founded to treat medical conditions was the Pennsylvania Hospital in 1751.

For much of the 19th century, hospitals tended mostly to the poor; the well-to-do were treated in their homes by physicians. As the nation became more industrialized and mobile, making it more difficult for families to care for each other, and as health care became more sophisticated and professional, Americans in all economic strata began to use hospitals.

Most of the hospitals of the 19th century were created by Protestant stewards, who took it upon themselves to look after the poor. Those hospitals advanced the concept of the American hospital. Newly arrived immigrants, however, found them lacking, and they founded their own medical institutions to respond to cultural considerations.

In addition to the impact of immigration, hospitals were changing rapidly 100 years ago because of technological innovation and policy changes driven by progressive reformers that made health care an important public policy issue.

One hundred years ago, America was reeling from the impact of the influenza epidemic, one of the worst pandemics in history. But out of that bleak episode would emerge a surge in the construction and funding of public hospitals, more widespread use of technology such as X-rays, and the discovery of vaccines for tuberculosis, tetanus, and yellow fever.

Even though hospitals have become an integral part of American communities, there are still areas in the country that lack them.

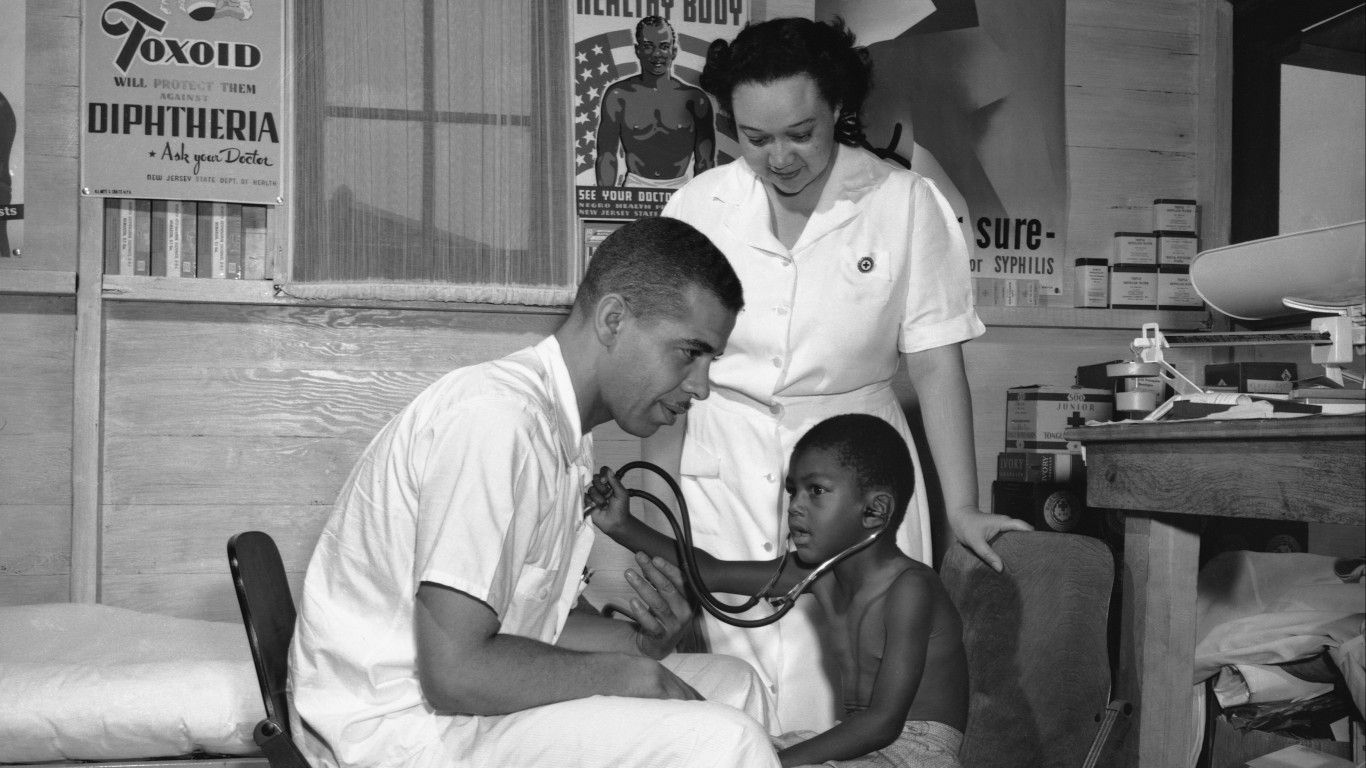

1. Segregation

Hospitals in many states were segregated by race until the late 1960s, and hospitals used by nonwhites had inadequate facilities. Some hospitals were also segregated by gender.

2. Public aid

Most hospitals were in cities. American cities had created so-called isolation hospitals in the 18th century, and these institutions began to receive public aid in the early 20th century.

3. Used mostly by the poor

Until the 20th century, hospitals were associated with the poor and seen as places where people went to die. One hundred years ago, the wealthy were treated at home by doctors who made house calls. Physicians were not paid by hospitals; they volunteered to treat the poor to help build their reputations.

4. Religious-affiliated hospitals

Religious groups, mostly in the Northeast, opened their own hospitals to accommodate dietary restrictions not met at other hospitals. Catholic hospitals didn’t serve meat on Fridays, and meals at Jewish institutions were kosher.

5. No pain management

Hospitals today devote much attention to managing pain, as patients are treated with opioids and other medications. That was not the case 100 years ago, when pain care was overlooked.

6. Longer stays

Women who gave birth in a hospital 100 years ago could stay there for as many as 10 days. Today, women are discharged one day after giving birth if there are no complications.

7. No nurse practitioners

There were no nurse practitioners 100 years ago. Nurse practitioners have since relieved doctors of some of the responsibility for prescribing medications, diagnosing diseases, and starting treatment.

8. Smoking on premises

Hard to believe now, but smoking was common throughout hospitals 100 years ago. People smoked in cafeterias and waiting rooms. Mayo Clinic in Rochester, Minnesota, was the first hospital in the United States to ban smoking on its campus in the early 2000s.

9. Technology

Technology has raised the number of outpatient surgical procedures. Today, some patients with kidney disease can have their dialysis treatments at home.

10. Doctor’s orders

One hundred years ago, doctors had the last word on treatment and patients had no say. Doctors withheld information from patients in the belief that informing them of their condition would cause psychological damage. Physicians also didn’t think patients could understand their condition or make decisions about their treatment.

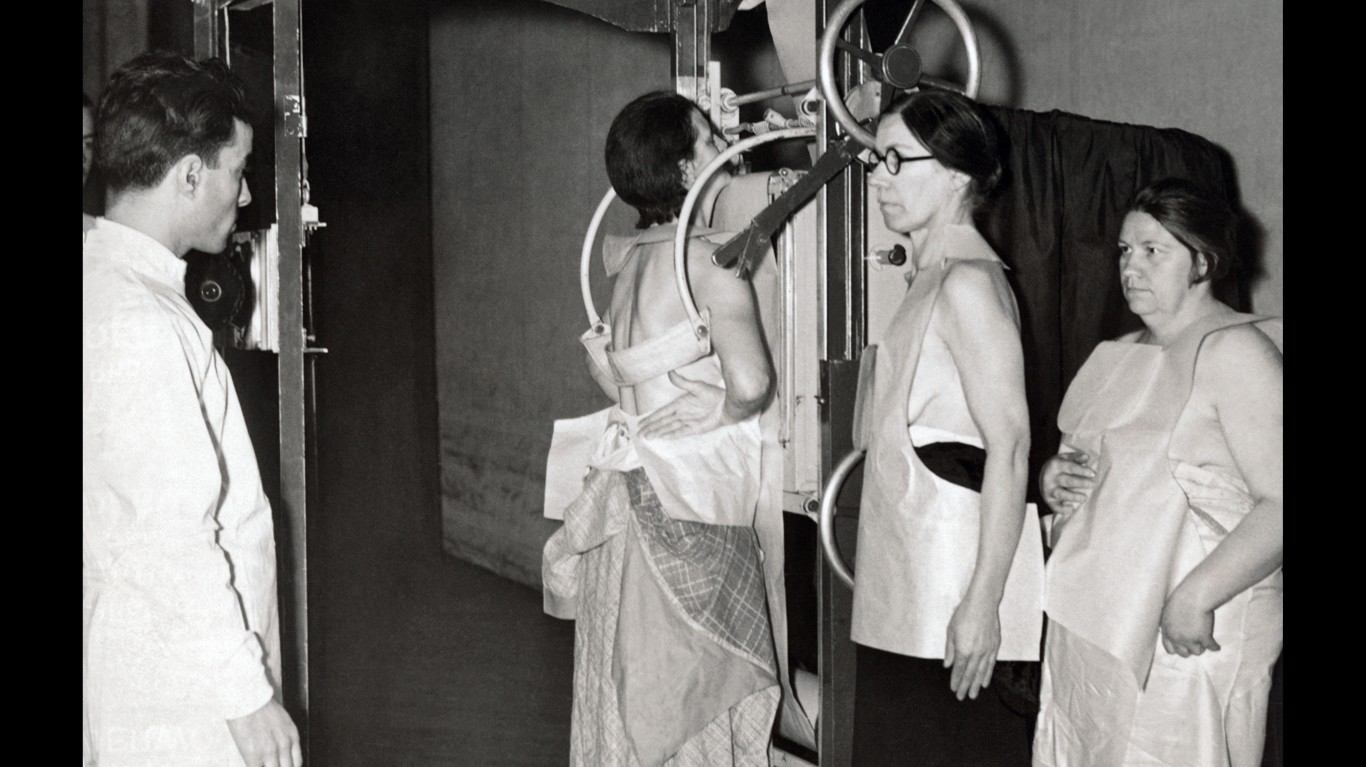

11. Little X-ray protection

There was little protection during X-ray procedures in the early part of the 20th century.

12. Operations in street clothes

Even though doctors understood the importance of cleanliness during an operation, many performed procedures in their street clothes and an apron.

13. Few babies born in hospitals

Most babies were born at home 100 years ago and delivered with the aid of a midwife or doctor. The first “maternity hospital” opened in 1914.

14. Elderly cared for at home

When the elderly got sick they were cared for at home by their families instead of in hospitals.

15. Sterilization standards lower

Doctors would scrub their hands before a procedure, but they did not wear rubber gloves. Surgical masks were not worn. Surgical instruments were cleaned in boiling water, which killed most germs, but might have left some spores. Today, hospitals sterilize instruments through a process called autoclaving (saturated steam under high pressure) that eradicates microorganisms and spores.

16. More public hospitals

There was a surge in public hospital funding and construction in the early part of the 20th century. Census data from that time indicates greater awareness of the need for public support for hospitals.

17. Centers of nursing

While there were still no nurse practitioners 100 years ago, nurses were becoming more critical to the operation of hospitals, and hospitals became centers for nursing education.

18. Public viewed operations

Surgeries could be viewed by the public in the 19th and early 20th centuries in open-air operating theaters, with nothing separating the public and the surgeons.

19. Multi-bed wards in use

The perception of hospitals as unsanitary places for the destitute was changing in the early 20th century. However, hospitals still had multi-bed wards. Hospital officials made various attempts to improve the ward configuration and included so-called quiet rooms. Hospitals were beginning to address the need for private rooms in public hospitals to separate those with infectious diseases.

20. Blood manually pumped out

If a surgeon wanted to get a blood-free view of the body part where he was going to operate (nearly all surgeons were male at the time), a member of the operating group used a hand-cranked pump to suck the blood out. Today, an electrically powered vacuum performs the task.

21. Bloodletting seen as cure

We associate bloodletting with the medicine of centuries past. However, the procedure was still used in the early 20th century.

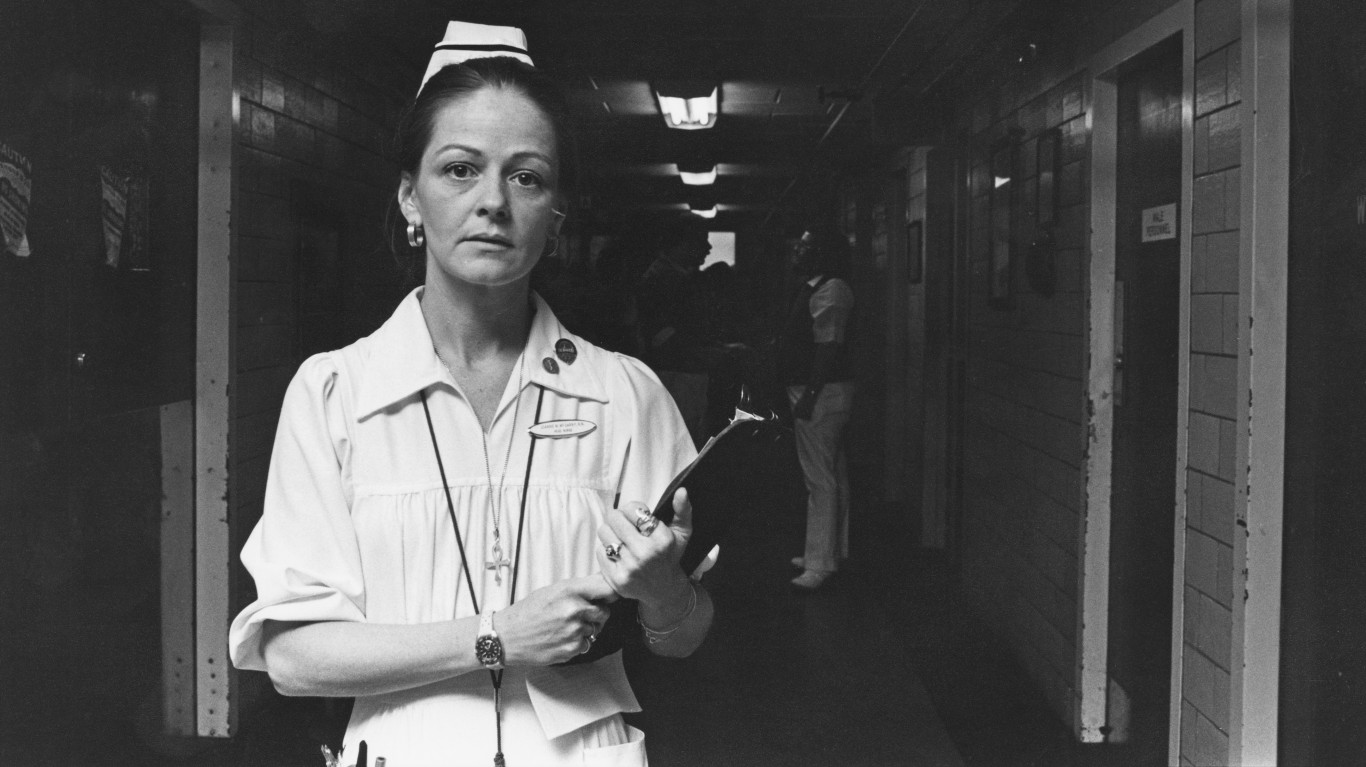

22. Nurses wore hats

Until the 1980s, nurses wore small white caps as part of their uniform. This ended when nurses began wearing scrubs.

23. More anesthesia use

Anesthesia was being used more frequently and efficiently 100 years ago. In 1914, Dr. Dennis E. Jackson developed a carbon dioxide-absorbing anesthesia system that used less anesthetic than previous methods.

24. For-profit hospitals in South

Hospitals in the South lacked the wealthy donors of the North, and many were for-profit institutions owned by physicians.

25. Little regulatory oversight

Hospitals were increasing in number in the United States 100 years ago, but there was no regulation of the industry. Hospitals were free to manage their own expenses, decide what to charge patients, and determine how to collect the bill.

26. Band-Aids just coming on market

The Band-Aid was invented by Earle Dickson, a cotton buyer for Johnson & Johnson. He made the bandage because his wife was accident-prone. At the time, people made bandages from gauze and tape. Johnson & Johnson put the Band-Aid on the market in 1921.

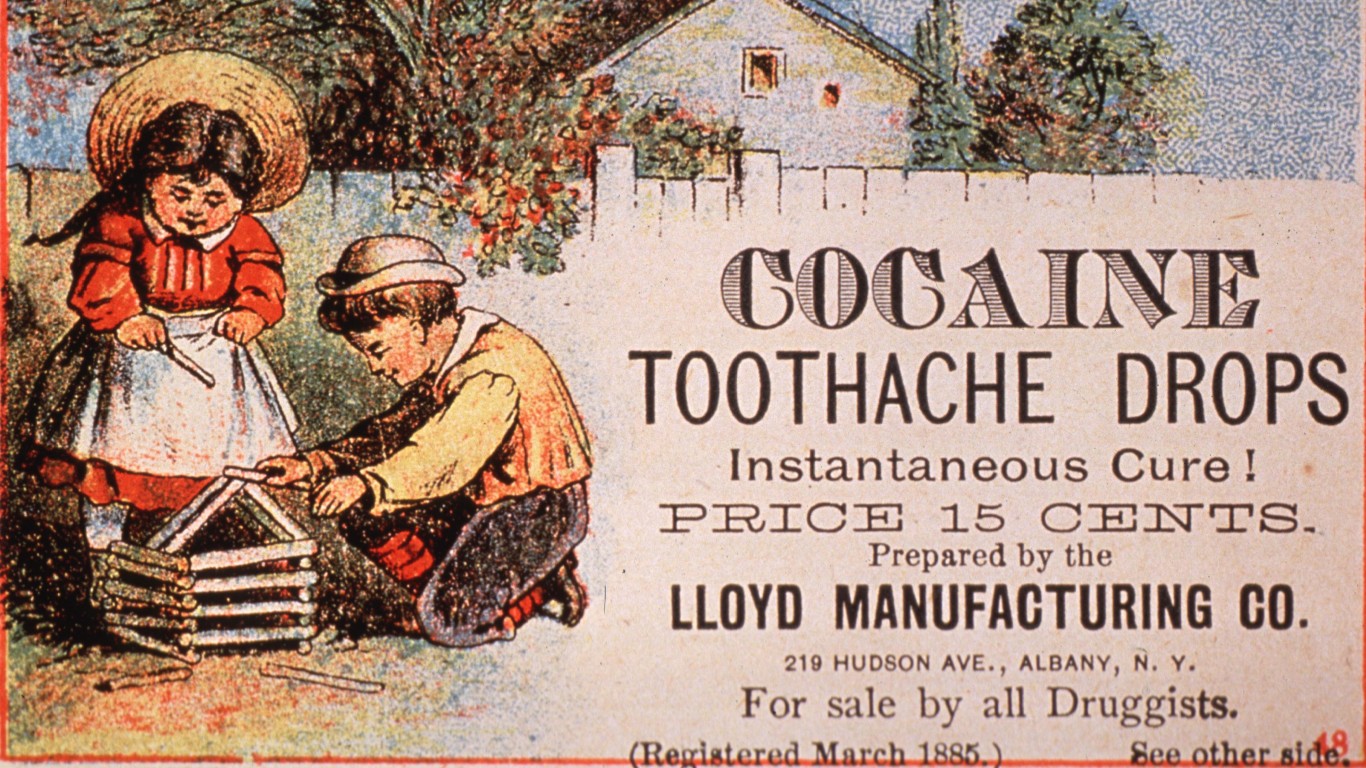

27. Cocaine used as wonder drug

We know cocaine as a drug that is used recreationally. In the first part of the 20th century, it was used in hospitals to treat hemorrhoids, indigestion, toothaches, and fatigue, and to suppress appetite.

28. Malaria used as treatment

In the early 20th century, doctors injected malaria germs into the bloodstream of patients to treat syphilis.

29. Fewer medicine treatments

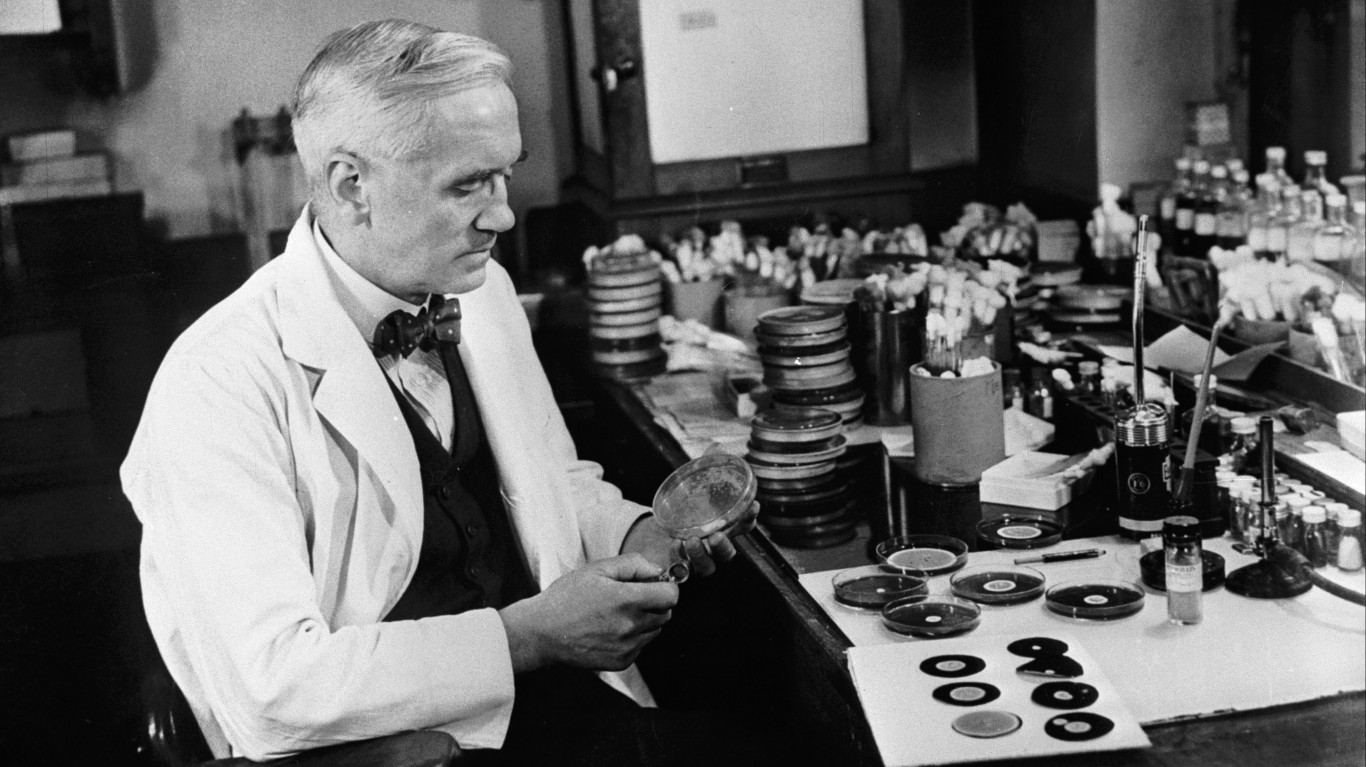

One hundred years ago, you could not get a vaccine for diphtheria, whooping cough, tuberculosis, tetanus, yellow fever, influenza, polio, or typhus. Insulin was first used for diabetes in 1922. Penicillin would not be discovered until 1928, by Sir Alexander Fleming.
