
The Dirty Expensive Secret of Artificial Intelligence and Machine Learning

When it comes to climate change and carbon emissions, the usual suspects get most of the knocks. Gasoline- and diesel-powered vehicles, coal-fired power plants, and big industrial users like aluminum refining and cement plants, for the most part, deserve their bad reputations.

Last year, a smaller, but still environmentally suspect, industry took something of a beating over its use of electricity. Bitcoin mining uses a lot of electricity as networks of thousands of computers paw through mountains of data seeking to verify a transaction and earn a bitcoin in payment. By one estimate, bitcoin mining uses 215 kWh of electricity just to verify one transaction.

Now, a new environmental villain may have just appeared. Artificial intelligence (AI) and machine learning (ML) have been called out in new research by Emma Strubell, Ananya Ganesh, and Andrew McCallum of the University of Massachusetts Amherst. They calculated the carbon footprint of training natural-language processing (NLP) models, based on the electricity used by the hardware needed to train the neural networks that underpin AI and ML.

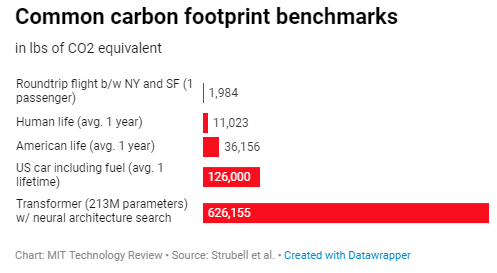

The chart above, from the MIT Technology Review, shows that training a single large AI model can produce carbon emissions nearly five times the life-cycle emissions of a typical American car.

While coming up with a suitable application of AI or ML is not all that difficult, the devil is in the details. In this case, the details involve training the machine, a task that can take hours or even days as the system combs through massive data sets to improve the accuracy of the results it returns. As the researchers put it, “[T]hese accuracy improvements depend on the availability of exceptionally large computational resources that necessitate similarly substantial energy consumption. As a result, these models are costly to train and develop, both financially, due to the cost of hardware and electricity or cloud compute time, and environmentally, due to the carbon footprint required to fuel modern tensor processing hardware.” (Tensor processing units, or TPUs, are specialized chips developed especially for neural network machine learning.)

The report continues: “Research and development of new models multiplies these costs by thousands of times by requiring retraining to experiment with model architectures and hyperparameters. Whereas a decade ago, most NLP models could be trained and developed on a commodity laptop or server, many now require multiple instances of specialized hardware such as GPUs or TPUs, therefore limiting access to these highly accurate models on the basis of finances.”

For example, a tuning process known as neural architecture search (NAS) generated blindingly high costs for “little associated benefit,” according to the research. How high? The training process required more than 656,000 kilowatt-hours of electricity at a carbon cost of more than 310 tons of carbon dioxide equivalent. Cloud-computing time alone cost between about $943,000 and $3.2 million.
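To see how an electricity figure turns into a carbon figure, here is a rough back-of-the-envelope sketch in Python. It is not the researchers' code. The conversion factor of 0.954 pounds of CO2 per kilowatt-hour is an assumption (an average for U.S. grid power), as is reading "tons" as U.S. short tons of 2,000 pounds; the function and constant names are made up for illustration.

```python
# Back-of-the-envelope conversion from electricity use to CO2 emissions.
# ASSUMPTIONS (not stated in the article): "tons" means U.S. short tons,
# and grid power emits about 0.954 lb of CO2 per kWh on average.

LB_PER_SHORT_TON = 2000
ASSUMED_LB_CO2_PER_KWH = 0.954  # assumed average U.S. grid emissions factor


def co2_short_tons(kwh, lb_co2_per_kwh=ASSUMED_LB_CO2_PER_KWH):
    """Estimate CO2 emissions, in short tons, for a given electricity use."""
    return kwh * lb_co2_per_kwh / LB_PER_SHORT_TON


def implied_factor(kwh, short_tons):
    """Work backward: pounds of CO2 per kWh implied by two reported figures."""
    return short_tons * LB_PER_SHORT_TON / kwh


if __name__ == "__main__":
    nas_kwh = 656_000  # "more than 656,000 kilowatt-hours" from the article
    print(f"Estimated emissions: {co2_short_tons(nas_kwh):.0f} short tons")
    print(f"Implied factor: {implied_factor(nas_kwh, 310):.3f} lb CO2 per kWh")
```

Under these assumptions, 656,000 kilowatt-hours works out to about 313 short tons, which squares with the "more than 310 tons" reported. A grid with cleaner or dirtier power would shift the result in proportion to its emissions factor.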

Among the research’s conclusions is a recommendation that industry and academia concentrate some effort on finding “more computationally efficient algorithms, as well as hardware that requires less energy.” And just in case you were wondering, the United States was the world’s second-largest carbon dioxide emitter in 2017, spitting out more than 5.3 billion tons of CO2.
